Very Important Questions on Artificial Intelligence (All Units Covered): Must-Read for Scoring Good Marks in Exams as per the AKTU Pattern

INTRODUCTION

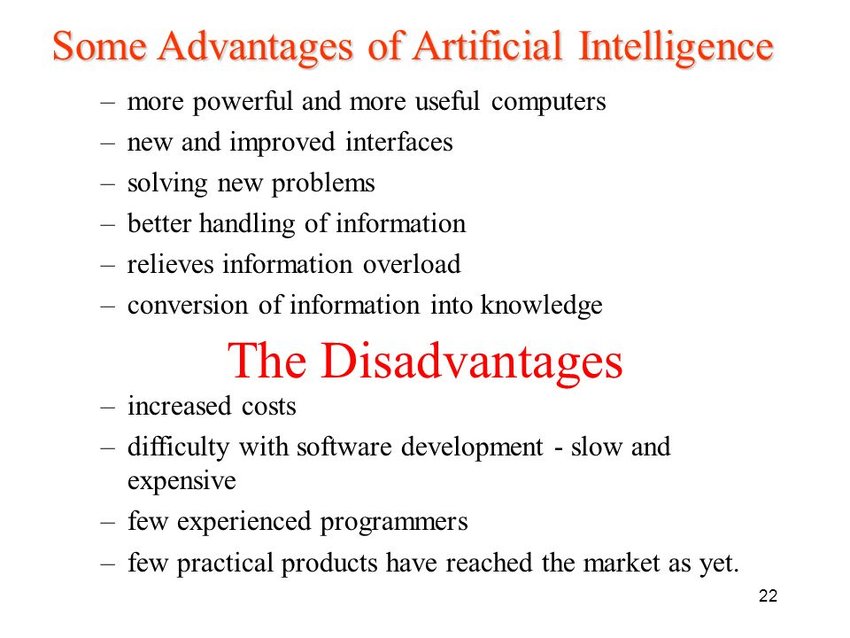

- Define AI, its advantages, foundations, and branches.

- Define agent and intelligent agent. Also describe the kinds of agent programs.

- NLP (Natural Language Processing)

- Turing Test

Artificial intelligence

In computer science, artificial intelligence, sometimes called machine intelligence, is intelligence demonstrated by machines, in contrast to the natural intelligence displayed by humans and other animals.

Artificial Intelligence is a way of making a computer, a computer-controlled robot, or a piece of software think intelligently, in a manner similar to the way intelligent humans think.

Application areas of AI include:

- Consumer goods: using natural language processing, machine learning and advanced analytics, Hello Barbie listens and responds to a child.

- Creative arts

- Energy

- Financial services

- Healthcare

- Manufacturing

- Media

- Retail

intelligent agent

In artificial intelligence, an intelligent agent (IA) is an autonomous entity which observes through sensors and acts upon an environment using actuators (i.e. it is an agent) and directs its activity towards achieving goals (i.e. it is "rational", as defined in economics).

Russell & Norvig (2003) group agents into five classes based on their degree of perceived intelligence and capability:

- simple reflex agents.

- model-based reflex agents.

- goal-based agents.

- utility-based agents.

- learning agents.

Agent Terminology

- Performance Measure of Agent − It is the criterion that determines how successful an agent is.

- Behavior of Agent − It is the action that the agent performs after any given sequence of percepts.

- Percept − It is the agent's perceptual input at a given instant.

- Percept Sequence − It is the history of all that an agent has perceived to date.

- Agent Function − It is a map from the percept sequence to an action.
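To make the idea of an agent function concrete, here is a minimal Python sketch of a table-driven agent, which stores the agent function literally as a lookup table from percept sequences to actions. The two-square vacuum world (squares "A" and "B"), the percept format, and the table entries are illustrative assumptions, not part of the original notes.

```python
from typing import Dict, List, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "Dirty")


class TableDrivenAgent:
    """Agent whose agent function is stored as a table: percept sequence -> action."""

    def __init__(self, table: Dict[Tuple[Percept, ...], str]) -> None:
        self.table = table
        self.percepts: List[Percept] = []  # the percept sequence seen so far

    def __call__(self, percept: Percept) -> str:
        self.percepts.append(percept)
        # Look up the whole percept sequence; "NoOp" if the table has no entry for it.
        return self.table.get(tuple(self.percepts), "NoOp")


# Hypothetical table covering only a few short percept sequences.
table = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
}

agent = TableDrivenAgent(table)
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right
```

Because the table needs one entry per possible percept sequence, it grows exponentially with the agent's lifetime; this is why practical agent programs, such as the reflex agents listed above, compute the action instead of storing it.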

Rationality

Rationality is the state of being reasonable, sensible, and having a good sense of judgment.

Rationality is concerned with expected actions and results depending upon what the agent has perceived. Performing actions with the aim of obtaining useful information is an important part of rationality.

What is Ideal Rational Agent?

An ideal rational agent is one that is capable of taking the actions expected to maximize its performance measure, on the basis of −

- Its percept sequence

- Its built-in knowledge base

Rationality of an agent depends on the following −

- The performance measures, which determine the degree of success.

- The agent's percept sequence to date.

- The agent’s prior knowledge about the environment.

- The actions that the agent can carry out.

A rational agent always performs the right action, where the right action is the one that causes the agent to be most successful given its percept sequence. The problem the agent solves is characterized by Performance Measure, Environment, Actuators, and Sensors (PEAS).

The Structure of Intelligent Agents

Agent’s structure can be viewed as −

- Agent = Architecture + Agent Program

- Architecture = the machinery that an agent executes on.

- Agent Program = an implementation of an agent function.

Simple Reflex Agents

- They choose actions only based on the current percept.

- They are rational only if the correct decision can be made on the basis of the current percept alone.

- They therefore work reliably only when the environment is fully observable.
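A minimal Python sketch of a simple reflex agent, assuming the same hypothetical two-square vacuum world: the action is chosen from the current percept only, through condition-action rules, with no memory of earlier percepts.

```python
from typing import Tuple


def reflex_vacuum_agent(percept: Tuple[str, str]) -> str:
    """Simple reflex agent: the action depends only on the current percept."""
    location, status = percept
    if status == "Dirty":  # rule 1: dirty square -> clean it
        return "Suck"
    if location == "A":    # rule 2: clean and in A -> move right
        return "Right"
    return "Left"          # rule 3: clean and in B -> move left


for p in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
    print(p, "->", reflex_vacuum_agent(p))
```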

Natural language processing

Natural language processing is a subfield of computer science, information engineering, and artificial intelligence concerned with the interactions between computers and human languages, in particular how to program computers to process and analyze large amounts of natural language data.

The field of study that focuses on the interactions between human language and computers is called Natural Language Processing, or NLP for short. It sits at the intersection of computer science, artificial intelligence, and computational linguistics.

Advantage of NLP

NLP advantages include fast processing, user-friendliness, the ability to infer solutions that may not have been created previously, and, most importantly, the ability to converse in a language you are comfortable with (e.g. English). NLP has a wide variety of applications in areas such as health, education, and government.

Turing Test

In artificial intelligence (AI), the Turing Test is a method of inquiry for determining whether or not a computer is capable of thinking like a human being. The test is named after Alan Turing, an English mathematician who pioneered machine learning during the 1940s and 1950s.

Common understanding has it that the purpose of the Turing test is not specifically to determine whether a computer is able to fool an interrogator into believing that it is a human, but rather whether a computer could imitate a human.

Importance of Turing Test

The Turing Test proposed by Alan Turing is one of his best-known works and a significant contribution to the field of Artificial Intelligence. He got the ball rolling for Artificial Intelligence by raising the idea that machines, too, can be intelligent.

AI - Popular Search Algorithms

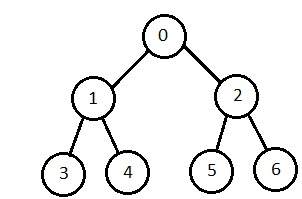

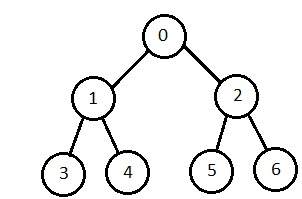

Breadth-First Search

It starts from the root node, explores the neighboring nodes first, and then moves on to the next-level neighbors. It expands the search tree one level at a time until the solution is found. It can be implemented using a FIFO queue data structure. This method finds the shortest path (in number of steps) to the solution.

If the branching factor (average number of child nodes for a given node) is $b$ and the depth is $d$, then the number of nodes at level $d$ is $b^d$.

The total number of nodes created in the worst case is $b + b^2 + b^3 + \dots + b^d$.

Disadvantage − Since each level of nodes is saved for creating next one, it consumes a lot of memory space. Space requirement to store nodes is exponential.

Its complexity depends on the number of nodes. It can check duplicate nodes.
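A minimal Python sketch of breadth-first search with a FIFO queue, assuming the state space is given as a hypothetical adjacency dictionary. The `parent` map doubles as the visited set, which is how this version checks for duplicate nodes.

```python
from collections import deque
from typing import Dict, List, Optional

Graph = Dict[str, List[str]]


def bfs(graph: Graph, start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search with a FIFO queue; returns a path with the fewest edges."""
    frontier = deque([start])                          # FIFO queue of generated nodes
    parent: Dict[str, Optional[str]] = {start: None}   # also serves as the visited set
    while frontier:
        node = frontier.popleft()                      # oldest node first
        if node == goal:
            path = []
            cur: Optional[str] = node
            while cur is not None:                     # follow parent links back to start
                path.append(cur)
                cur = parent[cur]
            return path[::-1]
        for child in graph.get(node, []):
            if child not in parent:                    # duplicate check
                parent[child] = node
                frontier.append(child)
    return None


graph = {"S": ["A", "B"], "A": ["C", "D"], "B": ["D", "G"], "C": [], "D": ["G"], "G": []}
print(bfs(graph, "S", "G"))  # ['S', 'B', 'G']
```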

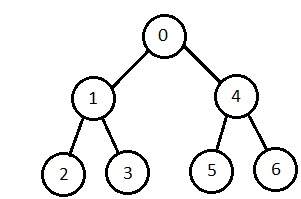

Depth-First Search

It is implemented recursively or with a LIFO stack data structure. It creates the same set of nodes as the breadth-first method, only in a different order.

As only the nodes on a single path from root to leaf are stored in each iteration, the space requirement to store nodes is linear. With branching factor $b$ and maximum depth $m$, the storage space is $bm$.

Disadvantage − This algorithm may not terminate and may go on infinitely along one path. The solution to this issue is to choose a cut-off depth. If the ideal cut-off is $d$ and the chosen cut-off is less than $d$, this algorithm may fail. If the chosen cut-off is more than $d$, execution time increases.

Its complexity depends on the number of paths. It cannot check duplicate nodes.
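A minimal Python sketch of depth-first search with an explicit LIFO stack and the cut-off depth discussed above; the `limit` parameter and the example graph are assumptions for illustration. Cycles are avoided only along the current path, so duplicates reached by different paths are not detected.

```python
from typing import Dict, List, Optional

Graph = Dict[str, List[str]]


def dfs(graph: Graph, start: str, goal: str, limit: int = 50) -> Optional[List[str]]:
    """Depth-first search using a LIFO stack of paths, with a depth cut-off."""
    stack = [[start]]                      # each entry is a path from the root
    while stack:
        path = stack.pop()                 # LIFO: most recently pushed path first
        node = path[-1]
        if node == goal:
            return path
        if len(path) - 1 >= limit:         # cut-off depth reached on this path
            continue
        # Push children in reverse so the leftmost child is expanded first.
        for child in reversed(graph.get(node, [])):
            if child not in path:          # avoid cycles on the current path only
                stack.append(path + [child])
    return None


graph = {"S": ["A", "B"], "A": ["C", "D"], "B": ["D", "G"], "C": [], "D": ["G"], "G": []}
print(dfs(graph, "S", "G"))           # ['S', 'A', 'D', 'G']
print(dfs(graph, "S", "G", limit=1))  # None (cut-off too small)
```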

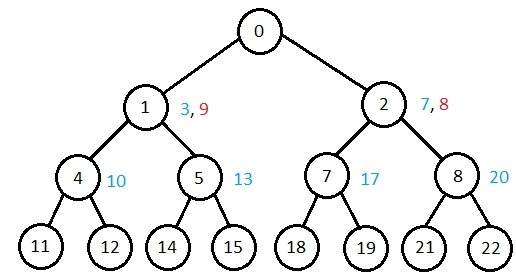

Bidirectional Search

It searches forward from the initial state and backward from the goal state until both meet at a common state.

The path from the initial state is concatenated with the inverse path from the goal state. Each search is done only up to half of the total path.
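A minimal Python sketch of bidirectional search, assuming an undirected graph so that the backward search can follow the same edges from the goal (in a directed graph it would need predecessor lists instead). Both sides run breadth-first, one level at a time, and the path is stitched together at the first node reached by both searches.

```python
from collections import deque
from typing import Deque, Dict, List, Optional

Graph = Dict[str, List[str]]  # undirected: every edge is listed in both directions
Parents = Dict[str, Optional[str]]


def bidirectional_search(graph: Graph, start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search from both ends; stops at the first node both searches reach."""
    if start == goal:
        return [start]
    parents_f: Parents = {start: None}  # forward search tree (from the initial state)
    parents_b: Parents = {goal: None}   # backward search tree (from the goal state)
    frontier_f: Deque[str] = deque([start])
    frontier_b: Deque[str] = deque([goal])

    def expand_level(frontier: Deque[str], parents: Parents, other: Parents) -> Optional[str]:
        """Expand one whole level of one search; return a meeting node if found."""
        for _ in range(len(frontier)):
            node = frontier.popleft()
            for nbr in graph.get(node, []):
                if nbr not in parents:
                    parents[nbr] = node
                    frontier.append(nbr)
                    if nbr in other:
                        return nbr
        return None

    while frontier_f and frontier_b:
        meet = expand_level(frontier_f, parents_f, parents_b)
        if meet is None:
            meet = expand_level(frontier_b, parents_b, parents_f)
        if meet is not None:
            path: List[str] = []
            cur: Optional[str] = meet
            while cur is not None:      # start ... meet, collected backwards
                path.append(cur)
                cur = parents_f[cur]
            path.reverse()
            cur = parents_b[meet]
            while cur is not None:      # meet ... goal: the inverse of the backward path
                path.append(cur)
                cur = parents_b[cur]
            return path
    return None


graph = {
    "S": ["A", "B"], "A": ["S", "C", "D"], "B": ["S", "D", "G"],
    "C": ["A"], "D": ["A", "B", "G"], "G": ["B", "D"],
}
print(bidirectional_search(graph, "S", "G"))  # ['S', 'B', 'G']
```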

Uniform Cost Search

Nodes are ordered by increasing path cost, and the least-cost node is always expanded first. It is identical to breadth-first search if every transition has the same cost.

It explores paths in increasing order of cost.

Disadvantage − There can be multiple long paths with cost ≤ C*, where C* is the cost of the optimal solution. Uniform cost search must explore them all.
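A minimal Python sketch of uniform cost search using `heapq` as the priority queue; the weighted example graph is hypothetical. The goal test is applied when a node is removed from the queue, not when it is generated, which is what makes the returned path the cheapest one.

```python
import heapq
from typing import Dict, List, Optional, Tuple

WeightedGraph = Dict[str, List[Tuple[str, float]]]


def uniform_cost_search(graph: WeightedGraph, start: str,
                        goal: str) -> Optional[Tuple[float, List[str]]]:
    """Always expand the frontier node with the lowest path cost g(n)."""
    frontier = [(0.0, start, [start])]     # priority queue ordered by path cost
    best = {start: 0.0}                    # cheapest known cost to each node
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:                   # goal test on expansion
            return cost, path
        if cost > best.get(node, float("inf")):
            continue                       # stale entry: a cheaper path was found later
        for child, step in graph.get(node, []):
            new_cost = cost + step
            if new_cost < best.get(child, float("inf")):
                best[child] = new_cost
                heapq.heappush(frontier, (new_cost, child, path + [child]))
    return None


graph = {"S": [("A", 1), ("B", 5)], "A": [("B", 1), ("G", 10)], "B": [("G", 1)], "G": []}
print(uniform_cost_search(graph, "S", "G"))  # (3.0, ['S', 'A', 'B', 'G'])
```

Here the shorter-looking route S → B → G costs 6, but the search returns S → A → B → G with cost 3.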

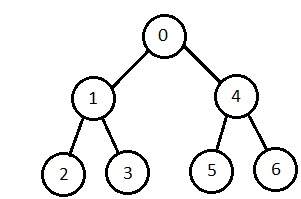

Iterative Deepening Depth-First Search

It performs depth-first search to level 1, starts over, executes a complete depth-first search to level 2, and continues in this way until the solution is found.

It never creates a node until all shallower nodes have been generated. It only saves a stack of nodes. The algorithm ends when it finds a solution at depth $d$. The number of nodes created at depth $d$ is $b^d$ and at depth $d-1$ is $b^{d-1}$.
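A minimal Python sketch of iterative deepening, built from a recursive depth-limited search; `max_depth` and the example graph are assumptions. Each iteration restarts from the root with a limit one larger than before, so only the current path is kept in memory.

```python
from typing import Dict, List, Optional

Graph = Dict[str, List[str]]


def depth_limited(graph: Graph, node: str, goal: str, limit: int,
                  path: List[str]) -> Optional[List[str]]:
    """Recursive depth-first search that goes no deeper than `limit` edges."""
    if node == goal:
        return path
    if limit == 0:
        return None
    for child in graph.get(node, []):
        if child not in path:  # avoid cycles on the current path
            found = depth_limited(graph, child, goal, limit - 1, path + [child])
            if found:
                return found
    return None


def iddfs(graph: Graph, start: str, goal: str, max_depth: int = 20) -> Optional[List[str]]:
    """Run depth-limited search with limits 0, 1, 2, ... until the goal is found."""
    for limit in range(max_depth + 1):
        found = depth_limited(graph, start, goal, limit, [start])
        if found:
            return found
    return None


graph = {"S": ["A", "B"], "A": ["C", "D"], "B": ["D", "G"], "C": [], "D": ["G"], "G": []}
print(iddfs(graph, "S", "G"))  # ['S', 'B', 'G']
```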

Comparison of Various Algorithms Complexities

Let us see the performance of algorithms based on various criteria −

| Criterion | Breadth-First | Depth-First | Bidirectional | Uniform Cost | Iterative Deepening |
|---|---|---|---|---|---|
| Time | $b^d$ | $b^m$ | $b^{d/2}$ | $b^d$ | $b^d$ |
| Space | $b^d$ | $bm$ | $b^{d/2}$ | $b^d$ | $bd$ |
| Optimality | Yes | No | Yes | Yes | Yes |
| Completeness | Yes | No | Yes | Yes | Yes |

Informed (Heuristic) Search Strategies

To solve large problems with a large number of possible states, problem-specific knowledge needs to be added to increase the efficiency of search algorithms.

Heuristic Evaluation Functions

They estimate the cost of an optimal path between two states. A heuristic function for sliding-tile games is computed by counting the number of moves each tile is away from its goal position and adding these numbers of moves over all tiles.
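The heuristic described above is the Manhattan distance. A minimal Python sketch for the 8-puzzle, assuming states are tuples of 9 tiles in row-major order with 0 standing for the blank:

```python
from typing import Sequence


def manhattan(state: Sequence[int], goal: Sequence[int], width: int = 3) -> int:
    """Sum over all tiles (blank excluded) of |row difference| + |column difference|."""
    where = {tile: i for i, tile in enumerate(goal)}  # goal index of each tile
    total = 0
    for i, tile in enumerate(state):
        if tile == 0:
            continue  # the blank is not a tile
        j = where[tile]
        total += abs(i // width - j // width) + abs(i % width - j % width)
    return total


goal = (1, 2, 3, 4, 5, 6, 7, 8, 0)
state = (1, 2, 3, 4, 5, 6, 0, 7, 8)
print(manhattan(state, goal))  # 2 (tiles 7 and 8 are each one move away)
```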

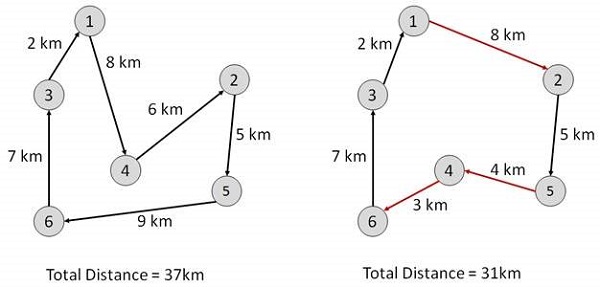

Pure Heuristic Search

It expands nodes in the order of their heuristic values. It creates two lists, a closed list for the already expanded nodes and an open list for the created but unexpanded nodes.

In each iteration, the node with the minimum heuristic value is expanded and moved to the closed list, and all its child nodes are created. Then, the heuristic function is applied to the child nodes, and they are placed in the open list according to their heuristic value. The shorter paths are saved and the longer ones are disposed of.
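A minimal Python sketch of pure heuristic search with an explicit open list (a `heapq` priority queue ordered by h) and a closed set. The graph and the heuristic values are hypothetical. Since it orders nodes by h(n) alone, this is effectively the greedy best-first search described later in these notes.

```python
import heapq
from typing import Callable, Dict, List, Optional, Set

Graph = Dict[str, List[str]]


def pure_heuristic_search(graph: Graph, start: str, goal: str,
                          h: Callable[[str], float]) -> Optional[List[str]]:
    """Expand nodes in order of h(n) only, keeping an open list and a closed list."""
    open_list = [(h(start), start, [start])]   # created but unexpanded nodes, by h
    closed: Set[str] = set()                   # already expanded nodes
    while open_list:
        _, node, path = heapq.heappop(open_list)  # minimum heuristic value
        if node == goal:
            return path
        if node in closed:
            continue
        closed.add(node)
        for child in graph.get(node, []):
            if child not in closed:
                heapq.heappush(open_list, (h(child), child, path + [child]))
    return None


graph = {"S": ["A", "B"], "A": ["C", "D"], "B": ["D", "G"], "C": [], "D": ["G"], "G": []}
h_good = {"S": 3, "A": 2, "B": 1, "C": 4, "D": 1, "G": 0}  # hypothetical estimates
h_bad = {"S": 3, "A": 0, "B": 5, "C": 0, "D": 1, "G": 0}   # a misleading heuristic
print(pure_heuristic_search(graph, "S", "G", h_good.__getitem__))  # ['S', 'B', 'G']
print(pure_heuristic_search(graph, "S", "G", h_bad.__getitem__))   # ['S', 'A', 'D', 'G']
```

With the misleading heuristic the search returns a longer path, which illustrates why ordering by h(n) alone is not optimal.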

A* Search

It is the best-known form of best-first search. It avoids expanding paths that are already expensive, and expands the most promising paths first.

f(n) = g(n) + h(n), where

- g(n) − the cost (so far) to reach the node

- h(n) − the estimated cost to get from the node to the goal

- f(n) − the estimated total cost of the path through n to the goal

It is implemented using a priority queue ordered by increasing f(n).
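A minimal Python sketch of A* with a `heapq` priority queue ordered by f(n) = g(n) + h(n). The graph and heuristic values are hypothetical; the heuristic is chosen to be admissible (it never overestimates the true remaining cost), which is the usual condition for A* to return an optimal path.

```python
import heapq
from typing import Callable, Dict, List, Optional, Tuple

WeightedGraph = Dict[str, List[Tuple[str, float]]]


def a_star(graph: WeightedGraph, start: str, goal: str,
           h: Callable[[str], float]) -> Optional[Tuple[float, List[str]]]:
    """Expand the node with the smallest f(n) = g(n) + h(n)."""
    frontier = [(h(start), 0.0, start, [start])]  # entries are (f, g, node, path)
    best_g = {start: 0.0}                          # cheapest g(n) found so far
    while frontier:
        _, g, node, path = heapq.heappop(frontier)
        if node == goal:
            return g, path
        if g > best_g.get(node, float("inf")):
            continue                               # stale entry
        for child, step in graph.get(node, []):
            g2 = g + step
            if g2 < best_g.get(child, float("inf")):
                best_g[child] = g2
                heapq.heappush(frontier, (g2 + h(child), g2, child, path + [child]))
    return None


graph = {"S": [("A", 1), ("B", 4)], "A": [("B", 2), ("G", 12)], "B": [("G", 3)], "G": []}
H = {"S": 5, "A": 4, "B": 2, "G": 0}  # hypothetical admissible estimates of cost-to-goal


def h(n: str) -> float:
    return H[n]


print(a_star(graph, "S", "G", h))  # (6.0, ['S', 'A', 'B', 'G'])
```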

Greedy Best First Search

It expands the node that is estimated to be closest to the goal, that is, it expands nodes based on f(n) = h(n). It is implemented using a priority queue.

Disadvantage − It can get stuck in loops. It is not optimal.

Local Search Algorithms

They start from a prospective solution and then move to a neighboring solution. They can return a valid solution even if they are interrupted at any time before they end.

Hill-Climbing Search

It is an iterative algorithm that starts with an arbitrary solution to a problem and attempts to find a better solution by changing a single element of the solution incrementally. If the change produces a better solution, the changed solution is taken as the new current solution. This process is repeated until no further improvement can be found.

    function Hill-Climbing(problem) returns a state that is a local maximum
        inputs: problem, a problem
        local variables: current, a node
                         neighbor, a node

        current <- Make_Node(Initial-State[problem])
        loop do
            neighbor <- a highest_valued successor of current
            if Value[neighbor] ≤ Value[current] then return State[current]
            current <- neighbor
        end

Disadvantage − This algorithm is neither complete, nor optimal.
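The pseudocode translates almost line for line into Python. This sketch assumes states are bit lists and a state's successors are obtained by flipping one bit; both objective functions are hypothetical examples. The second one has a local maximum, which shows why the algorithm is not optimal.

```python
from typing import Callable, List

State = List[int]


def hill_climbing(start: State, value: Callable[[State], int]) -> State:
    """Steepest-ascent hill climbing; successors differ from the current state in one bit."""
    current = start
    while True:
        successors = [current[:i] + [1 - current[i]] + current[i + 1:]
                      for i in range(len(current))]
        neighbor = max(successors, key=value)   # a highest-valued successor
        if value(neighbor) <= value(current):
            return current                      # no uphill move left: local maximum
        current = neighbor


def trap(s: State) -> int:
    """Hypothetical objective with a local maximum (value 3) at the all-zero state."""
    return 3 if not any(s) else sum(s)


print(hill_climbing([0, 1, 0, 0], sum))   # [1, 1, 1, 1]  (global maximum, value 4)
print(hill_climbing([1, 0, 0, 0], trap))  # [0, 0, 0, 0]  (stuck at value 3, not 4)
```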

Local Beam Search

This algorithm holds k states at any given time. At the start, these states are generated randomly. The successors of these k states are computed and evaluated with the objective function. If any of these successors attains the maximum value of the objective function, the algorithm stops.

Otherwise, the successors are placed in a pool, the pool is sorted by objective value, and the best k states are selected as the new current states. This process continues until a maximum value is reached.

    function BeamSearch(problem, k) returns a solution state
        start with k randomly generated states
        loop
            generate all successors of all k states
            if any of the states = solution then return the state
            else select the k best successors
        end
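A minimal Python sketch following the BeamSearch pseudocode (it keeps the k best successors). Assumptions for illustration: states are bit lists, successors flip one bit, the objective is the number of 1-bits, and a fixed `seed` makes the random start reproducible.

```python
import random
from typing import Callable, List

State = List[int]


def successors(s: State) -> List[State]:
    """All states that differ from s in exactly one bit."""
    return [s[:i] + [1 - s[i]] + s[i + 1:] for i in range(len(s))]


def local_beam_search(k: int, n: int, value: Callable[[State], int], max_value: int,
                      steps: int = 100, seed: int = 0) -> State:
    """Keep k states; each round, replace them with the k best of all their successors."""
    rng = random.Random(seed)
    states = [[rng.randint(0, 1) for _ in range(n)] for _ in range(k)]  # k random states
    for _ in range(steps):
        pool = [c for s in states for c in successors(s)]   # successors of all k states
        for c in pool:
            if value(c) == max_value:                       # a solution was generated
                return c
        states = sorted(pool, key=value, reverse=True)[:k]  # select the k best successors
    return max(states, key=value)                           # best found within the budget


print(local_beam_search(k=3, n=8, value=sum, max_value=8))  # [1, 1, 1, 1, 1, 1, 1, 1]
```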

Simulated Annealing

Annealing is the process of heating and cooling a metal to change its internal structure and thereby modify its physical properties. When the metal cools, its new structure becomes fixed, and the metal retains its newly obtained properties. In the simulated annealing process, the temperature is kept variable.

We initially set the temperature high and then allow it to 'cool' slowly as the algorithm proceeds. When the temperature is high, the algorithm is allowed to accept worse solutions with high frequency.

Start

1. Initialize k = 0; L = integer number of variables.

2. From i → j, compute the performance difference Δ.

3. If Δ ≤ 0, accept; else if exp(-Δ/T(k)) > random(0, 1), accept.

4. Repeat steps 2 and 3 for L(k) steps.

5. k = k + 1.

6. Repeat steps 2 through 5 until the stopping criterion is met.

End
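A minimal Python sketch of the procedure for minimizing a one-dimensional cost. Assumptions not in the original: a geometric cooling schedule T(k) = t0 * alpha**k, a random step in [-1, 1] as the move from state i to neighbor j, a fixed number of moves per temperature in place of L(k), and stopping when the temperature drops below `t_min`.

```python
import math
import random
from typing import Callable


def simulated_annealing(cost: Callable[[float], float], x0: float, t0: float = 10.0,
                        alpha: float = 0.95, moves_per_t: int = 50,
                        t_min: float = 1e-3, seed: int = 0) -> float:
    """Minimize `cost`, cooling geometrically: T(k) = t0 * alpha**k (an assumed schedule)."""
    rng = random.Random(seed)
    x = best = x0
    k = 0
    while t0 * alpha ** k > t_min:                  # stop once the system has cooled
        t = t0 * alpha ** k                         # current temperature T(k)
        for _ in range(moves_per_t):                # fixed number of moves per temperature
            candidate = x + rng.uniform(-1.0, 1.0)  # move from state i to a neighbor j
            delta = cost(candidate) - cost(x)       # performance difference Δ
            if delta <= 0 or math.exp(-delta / t) > rng.random():
                x = candidate                       # accept: always if better, sometimes if worse
                if cost(x) < cost(best):
                    best = x                        # remember the best state seen
        k += 1                                      # k = k + 1 lowers the temperature
    return best


def f(x: float) -> float:
    return (x - 3.0) ** 2  # hypothetical cost with its minimum at x = 3


print(round(simulated_annealing(f, x0=-10.0), 2))
```

The result lands close to the minimum at x = 3; the exact value depends on the random seed and the schedule parameters.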

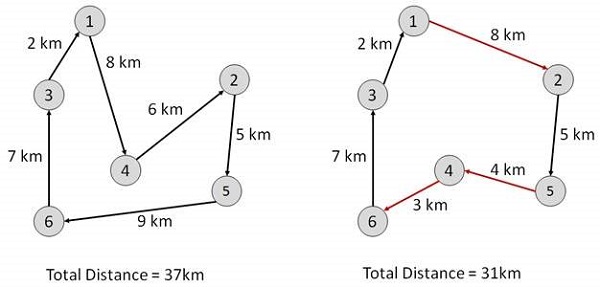

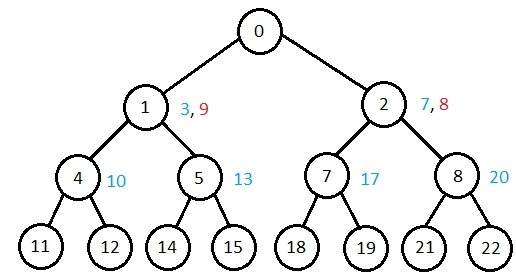

Travelling Salesman Problem

In this problem, the objective is to find a low-cost tour that starts from a city, visits all the other cities en route exactly once, and ends at the same starting city.

Start

- Find all (n − 1)! possible solutions, where n is the total number of cities.

- Determine the minimum cost by finding the cost of each of these (n − 1)! solutions.

- Finally, keep the one with the minimum cost.

End
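A direct Python sketch of the brute-force method, using `itertools.permutations` to enumerate the (n - 1)! tours that start and end at city 0. The 4-city distance matrix is a made-up example.

```python
from itertools import permutations
from typing import List, Sequence, Tuple


def tsp_brute_force(dist: Sequence[Sequence[float]]) -> Tuple[float, List[int]]:
    """Try all (n-1)! tours that start and end at city 0; keep the cheapest."""
    n = len(dist)
    best_cost: float = float("inf")
    best_tour: List[int] = []
    for perm in permutations(range(1, n)):     # city 0 is fixed as the start
        tour = [0, *perm, 0]
        cost = sum(dist[a][b] for a, b in zip(tour, tour[1:]))
        if cost < best_cost:
            best_cost, best_tour = cost, tour
    return best_cost, best_tour


dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
print(tsp_brute_force(dist))  # (80, [0, 1, 3, 2, 0])
```

This is only practical for very small n, since (n - 1)! grows extremely fast.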

Natural Language Processing (NLP) refers to the AI method of communicating with intelligent systems using a natural language such as English.

Processing of natural language is required when you want an intelligent system such as a robot to perform as per your instructions, when you want to hear a decision from a dialogue-based clinical expert system, etc.

The field of NLP involves making computers perform useful tasks with the natural languages humans use. The input and output of an NLP system can be −

- Speech

- Written Text

Components of NLP

There are two components of NLP as given −

Natural Language Understanding (NLU)

Understanding involves the following tasks −

- Mapping the given input in natural language into useful representations.

- Analyzing different aspects of the language.

Natural Language Generation (NLG)

It is the process of producing meaningful phrases and sentences in the form of natural language from some internal representation.

It involves −

- Text planning − It includes retrieving the relevant content from the knowledge base.

- Sentence planning − It includes choosing the required words, forming meaningful phrases, and setting the tone of the sentence.

- Text Realization − It is mapping the sentence plan into sentence structure.

NLU is harder than NLG.

Difficulties in NLU

NL has an extremely rich form and structure.

It is very ambiguous. There can be different levels of ambiguity −

- Lexical ambiguity − It occurs at the most primitive level, such as the word level.

- For example, should the word “board” be treated as a noun or a verb?

- Syntax-level ambiguity − A sentence can be parsed in different ways.

- For example, “He lifted the beetle with red cap.” − Did he use a cap to lift the beetle, or did he lift a beetle that had a red cap?

- Referential ambiguity − Referring to something using pronouns. For example, Rima went to Gauri. She said, “I am tired.” − Exactly who is tired?

- One input can have different meanings.

- Many inputs can mean the same thing.

NLP Terminology

- Phonology − It is the study of organizing sounds systematically.

- Morphology − It is the study of how words are constructed from primitive meaningful units.

- Morpheme − It is the primitive unit of meaning in a language.

- Syntax − It refers to arranging words to make a sentence. It also involves determining the structural role of words in the sentence and in phrases.

- Semantics − It is concerned with the meaning of words and how to combine words into meaningful phrases and sentences.

- Pragmatics − It deals with using and understanding sentences in different situations and how the interpretation of the sentence is affected.

- Discourse − It deals with how the immediately preceding sentence can affect the interpretation of the next sentence.

- World Knowledge − It includes the general knowledge about the world.

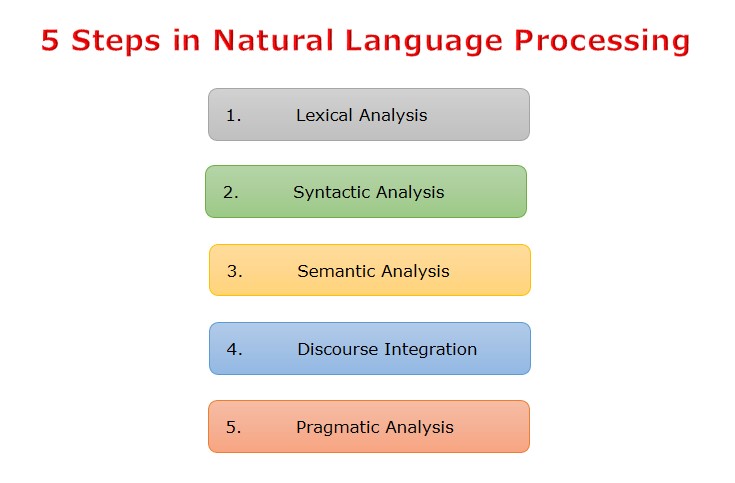

Steps in NLP

There are five general steps −

- Lexical Analysis − It involves identifying and analyzing the structure of words. The lexicon of a language is the collection of words and phrases in that language. Lexical analysis divides the whole chunk of text into paragraphs, sentences, and words.

- Syntactic Analysis (Parsing) − It involves analyzing the words in a sentence for grammar and arranging them in a manner that shows the relationships among them. A sentence such as “The school goes to boy” is rejected by an English syntactic analyzer.

- Semantic Analysis − It draws the exact meaning, or the dictionary meaning, from the text. The text is checked for meaningfulness. This is done by mapping syntactic structures to objects in the task domain. The semantic analyzer disregards phrases such as “hot ice-cream”.

- Discourse Integration − The meaning of any sentence depends upon the meaning of the sentence just before it. In addition, it also brings about the meaning of the immediately succeeding sentence.

- Pragmatic Analysis − During this step, what was said is re-interpreted in terms of what it actually meant. It involves deriving those aspects of language which require real-world knowledge.
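The lexical analysis step can be sketched with regular expressions. This naive Python version, an illustration rather than a production tokenizer, splits paragraphs at blank lines and sentences after '.', '!' or '?', keeps alphabetic words, and returns one word list per sentence.

```python
import re
from typing import List


def lexical_analysis(text: str) -> List[List[str]]:
    """Split text into paragraphs, then sentences, then words (naive rules)."""
    result = []
    for paragraph in re.split(r"\n\s*\n", text.strip()):              # blank line = new paragraph
        for sentence in re.split(r"(?<=[.!?])\s+", paragraph.strip()):  # split after . ! ?
            words = re.findall(r"[A-Za-z]+(?:['-][A-Za-z]+)*", sentence)
            if words:
                result.append(words)
    return result


text = "The bird pecks the grains. It is hungry!\n\nThe school is closed."
print(lexical_analysis(text))
# [['The', 'bird', 'pecks', 'the', 'grains'], ['It', 'is', 'hungry'], ['The', 'school', 'is', 'closed']]
```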

Implementation Aspects of Syntactic Analysis

There are a number of algorithms researchers have developed for syntactic analysis, but we consider only the following simple methods −

- Context-Free Grammar

- Top-Down Parser

Let us see them in detail −

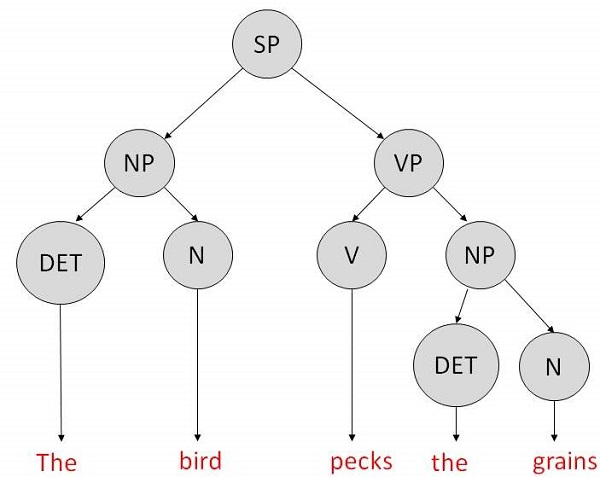

Context-Free Grammar

It is a grammar consisting of rules with a single symbol on the left-hand side of each rewrite rule. Let us create a grammar to parse the sentence −

“The bird pecks the grains”

Articles (DET) − a | an | the

Nouns − bird | birds | grain | grains

Noun Phrase (NP) − Article + Noun | Article + Adjective + Noun

= DET N | DET ADJ N

Verbs − pecks | pecking | pecked

Verb Phrase (VP) − NP V | V NP

Adjectives (ADJ) − beautiful | small | chirping

The parse tree breaks down the sentence into structured parts so that the computer can easily understand and process it. In order for the parsing algorithm to construct this parse tree, a set of rewrite rules, which describe what tree structures are legal, need to be constructed.

These rules say that a certain symbol may be expanded in the tree by a sequence of other symbols. For example, the first rule below says that if there are two strings, a Noun Phrase (NP) and a Verb Phrase (VP), then the string formed by NP followed by VP is a sentence. The rewrite rules for the sentence are as follows −

S → NP VP

NP → DET N | DET ADJ N

VP → V NP

Lexicon −

DET → a | the

ADJ → beautiful | perching

N → bird | birds | grain | grains

V → peck | pecks | pecking

The parse tree can be created as shown −

Now consider the above rewrite rules. Since V can be replaced by both "peck" and "pecks", sentences such as "The bird peck the grains" can be wrongly permitted, i.e., the subject-verb agreement error is accepted as correct.

Merit − It is the simplest style of grammar and is therefore widely used.

Demerits −

- They are not highly precise. For example, “The grains peck the bird” is syntactically correct according to the parser; even though it makes no sense, the parser accepts it as a correct sentence.

- To bring out high precision, multiple sets of grammar need to be prepared. It may require completely different sets of rules for parsing singular and plural variations, passive sentences, etc., which can lead to the creation of a huge, unmanageable set of rules.
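The demerits above can be checked mechanically. This Python sketch encodes the rewrite rules and lexicon from the text (in lowercase) and enumerates every sentence the grammar generates; both problem sentences turn out to be in the language.

```python
from itertools import product
from typing import Dict, List

# Rewrite rules and lexicon from the text; each nonterminal maps to its alternatives.
GRAMMAR: Dict[str, List[List[str]]] = {
    "S": [["NP", "VP"]],
    "NP": [["DET", "N"], ["DET", "ADJ", "N"]],
    "VP": [["V", "NP"]],
    "DET": [["a"], ["the"]],
    "ADJ": [["beautiful"], ["perching"]],
    "N": [["bird"], ["birds"], ["grain"], ["grains"]],
    "V": [["peck"], ["pecks"], ["pecking"]],
}


def generate(symbol: str) -> List[List[str]]:
    """Return every word sequence derivable from `symbol` (this grammar is finite)."""
    if symbol not in GRAMMAR:            # terminal symbol: a word
        return [[symbol]]
    sentences = []
    for rhs in GRAMMAR[symbol]:
        # Combine every expansion of each right-hand-side symbol, left to right.
        for parts in product(*(generate(s) for s in rhs)):
            sentences.append([w for part in parts for w in part])
    return sentences


language = {" ".join(s) for s in generate("S")}
print(len(language))                           # 1728
print("the bird peck the grains" in language)  # True: agreement error accepted
print("the grains peck the bird" in language)  # True: grammatical but meaningless
```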

Top-Down Parser

Here, the parser starts with the S symbol and attempts to rewrite it into a sequence of terminal symbols that matches the classes of the words in the input sentence until it consists entirely of terminal symbols.

These are then checked against the input sentence to see if they match. If not, the process is started over again with a different set of rules. This is repeated until a rule set is found that describes the structure of the sentence.

Merit − It is simple to implement.

Demerits −

- It is inefficient, as the search process has to be repeated if an error occurs.

- It is slow.
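A minimal Python sketch of a top-down parser for the same grammar (rules and lexicon copied from the text, lowercased). It expands S depth-first, tries the rules for each symbol in order, and backtracks to the most recent choice when a word fails to match, a common refinement of restarting from scratch. The parse-tree format (nested tuples) is an assumption.

```python
from typing import Dict, Iterator, List, Optional, Tuple, Union

Tree = Union[str, Tuple]  # a word, or (symbol, child1, child2, ...)

# Rewrite rules and lexicon from the text, lowercased.
GRAMMAR: Dict[str, List[List[str]]] = {
    "S": [["NP", "VP"]],
    "NP": [["DET", "N"], ["DET", "ADJ", "N"]],
    "VP": [["V", "NP"]],
    "DET": [["a"], ["the"]],
    "ADJ": [["beautiful"], ["perching"]],
    "N": [["bird"], ["birds"], ["grain"], ["grains"]],
    "V": [["peck"], ["pecks"], ["pecking"]],
}


def expand(symbol: str, words: List[str], i: int) -> Iterator[Tuple[Tree, int]]:
    """Yield (tree, next position) for every way `symbol` can cover words starting at i."""
    if symbol not in GRAMMAR:                  # terminal: must equal the next word
        if i < len(words) and words[i] == symbol:
            yield symbol, i + 1
        return
    for rhs in GRAMMAR[symbol]:                # try each rule in order
        for children, j in expand_seq(rhs, words, i):
            yield (symbol, *children), j


def expand_seq(rhs: List[str], words: List[str], i: int) -> Iterator[Tuple[List[Tree], int]]:
    """Yield (children, next position) for every way the symbol sequence `rhs` matches."""
    if not rhs:
        yield [], i
        return
    for first, j in expand(rhs[0], words, i):
        for rest, k in expand_seq(rhs[1:], words, j):  # backtracks here on failure
            yield [first] + rest, k


def parse(sentence: str) -> Optional[Tree]:
    words = sentence.lower().split()
    for tree, end in expand("S", words, 0):
        if end == len(words):                  # the whole sentence must be consumed
            return tree
    return None


print(parse("The bird pecks the grains"))
# ('S', ('NP', ('DET', 'the'), ('N', 'bird')), ('VP', ('V', 'pecks'), ('NP', ('DET', 'the'), ('N', 'grains'))))
```

Like any naive top-down parser, this one would loop forever on a left-recursive rule such as NP → NP PP; the grammar here has none.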

Artificial Intelligence - Fuzzy Logic Systems

- Fuzzy Logic Systems (FLS) produce acceptable but definite output in response to incomplete, ambiguous, distorted, or inaccurate (fuzzy) input.

What is Fuzzy Logic?

Fuzzy Logic (FL) is a method of reasoning that resembles human reasoning. The approach of FL imitates the way humans make decisions, which involves all intermediate possibilities between the digital values YES and NO.

The conventional logic block that a computer can understand takes precise input and produces a definite output as TRUE or FALSE, which is equivalent to a human's YES or NO.

The inventor of fuzzy logic, Lotfi Zadeh, observed that unlike computers, human decision making includes a range of possibilities between YES and NO, such as −

- CERTAINLY YES

- POSSIBLY YES

- CANNOT SAY

- POSSIBLY NO

- CERTAINLY NO

Fuzzy logic works on the levels of possibility of the input to achieve a definite output.

Implementation

- It can be implemented in systems with various sizes and capabilities ranging from small micro-controllers to large, networked, workstation-based control systems.

- It can be implemented in hardware, software, or a combination of both.

Why Fuzzy Logic?

Fuzzy logic is useful for commercial and practical purposes.

- It can control machines and consumer products.

- It may not give accurate reasoning, but it gives acceptable reasoning.

- Fuzzy logic helps to deal with the uncertainty in engineering.

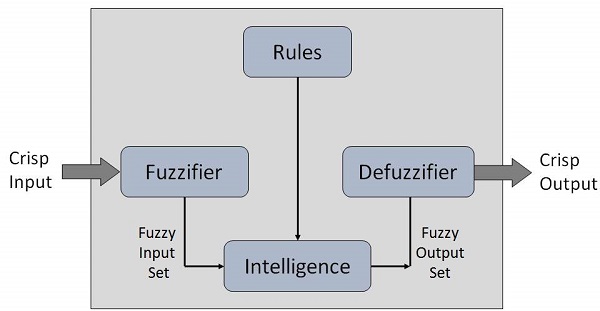

Fuzzy Logic Systems Architecture

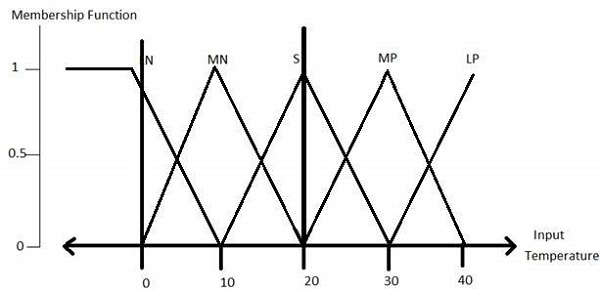

It has four main parts as shown −

- Fuzzification Module − It transforms the system inputs, which are crisp numbers, into fuzzy sets. It splits the input signal into five steps such as −

| Term | Meaning |
|---|---|
| LP | x is Large Positive |
| MP | x is Medium Positive |
| S | x is Small |
| MN | x is Medium Negative |
| LN | x is Large Negative |

- Knowledge Base − It stores IF-THEN rules provided by experts.

- Inference Engine − It simulates the human reasoning process by making fuzzy inference on the inputs and IF-THEN rules.

- Defuzzification Module − It transforms the fuzzy set obtained by the inference engine into a crisp value.

The membership functions work on fuzzy sets of variables.

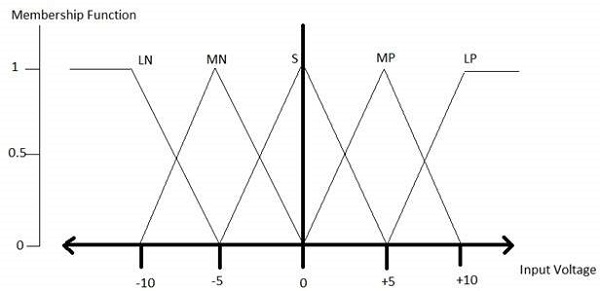

Membership Function

Membership functions allow you to quantify a linguistic term and represent a fuzzy set graphically. A membership function for a fuzzy set $A$ on the universe of discourse $X$ is defined as $\mu_A : X \to [0, 1]$.

Here, each element of $X$ is mapped to a value between 0 and 1. It is called the membership value or degree of membership, and it quantifies the degree to which the element of $X$ belongs to the fuzzy set $A$.

- x axis represents the universe of discourse.

- y axis represents the degrees of membership in the [0, 1] interval.

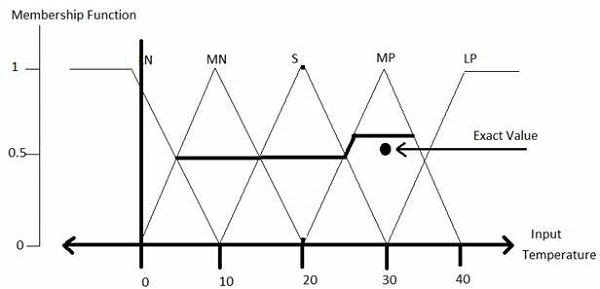

There can be multiple membership functions applicable to fuzzify a numerical value. Simple membership functions are used because complex functions do not add more precision to the output.

All membership functions for LP, MP, S, MN, and LN are shown as below −

The triangular membership function shapes are most common among various other membership function shapes such as trapezoidal, singleton, and Gaussian.

Here, the input to the 5-level fuzzifier varies from -10 volts to +10 volts. Hence the corresponding output also changes.
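A minimal Python sketch of a 5-level triangular fuzzifier for the -10 V to +10 V input. The breakpoints of each triangle are assumptions, since the figure with the actual membership functions is not reproduced here.

```python
from typing import Dict, Tuple


def triangle(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership: 0 outside [a, c], 1 at the peak b (a == b or b == c is a shoulder)."""
    if x == b:
        return 1.0
    if x < a or x > c:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical breakpoints (volts) for the five linguistic terms.
TERMS: Dict[str, Tuple[float, float, float]] = {
    "LN": (-10.0, -10.0, -5.0),
    "MN": (-10.0, -5.0, 0.0),
    "S": (-5.0, 0.0, 5.0),
    "MP": (0.0, 5.0, 10.0),
    "LP": (5.0, 10.0, 10.0),
}


def fuzzify(x: float) -> Dict[str, float]:
    """Map a crisp input voltage to its degree of membership in each term."""
    return {term: round(triangle(x, *abc), 3) for term, abc in TERMS.items()}


print(fuzzify(2.5))  # {'LN': 0.0, 'MN': 0.0, 'S': 0.5, 'MP': 0.5, 'LP': 0.0}
```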

Example of a Fuzzy Logic System

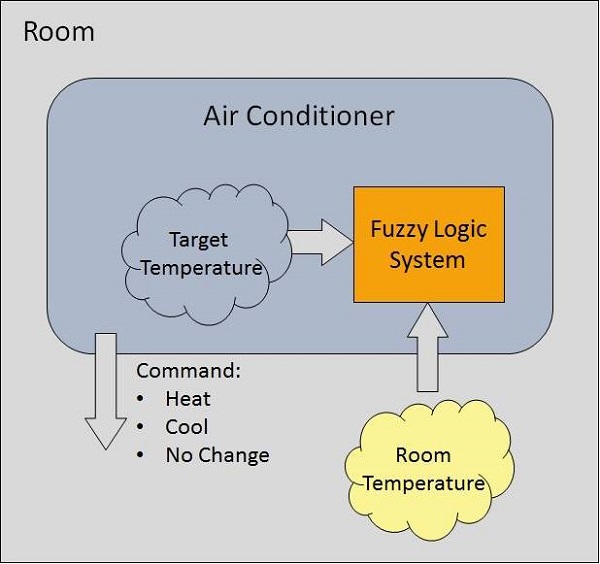

Let us consider an air conditioning system with a 5-level fuzzy logic system. This system adjusts the temperature of the air conditioner by comparing the room temperature with the target temperature value.

Algorithm

- Define linguistic Variables and terms (start)

- Construct membership functions for them. (start)

- Construct knowledge base of rules (start)

- Convert crisp data into fuzzy data sets using membership functions. (fuzzification)

- Evaluate rules in the rule base. (Inference Engine)

- Combine results from each rule. (Inference Engine)

- Convert output data into non-fuzzy values. (defuzzification)

Development

Step 1 − Define linguistic variables and terms

Linguistic variables are input and output variables in the form of simple words or sentences. For room temperature, cold, warm, hot, etc., are linguistic terms.

Temperature (t) = {very-cold, cold, warm, very-warm, hot}

Every member of this set is a linguistic term, and it can cover some portion of the overall temperature values.

Step 2 − Construct membership functions for them

The membership functions of the temperature variable are as shown −

Step 3 − Construct knowledge base rules

Create a matrix of room temperature values versus target temperature values that an air conditioning system is expected to provide.

| Room Temp. / Target | Very_Cold | Cold | Warm | Hot | Very_Hot |
|---|---|---|---|---|---|
| Very_Cold | No_Change | Heat | Heat | Heat | Heat |
| Cold | Cool | No_Change | Heat | Heat | Heat |
| Warm | Cool | Cool | No_Change | Heat | Heat |
| Hot | Cool | Cool | Cool | No_Change | Heat |
| Very_Hot | Cool | Cool | Cool | Cool | No_Change |

Build a set of rules into the knowledge base in the form of IF-THEN-ELSE structures.

| Sr. No. | Condition | Action |
|---|---|---|
| 1 | IF temperature=(Cold OR Very_Cold) AND target=Warm THEN | Heat |
| 2 | IF temperature=(Hot OR Very_Hot) AND target=Warm THEN | Cool |
| 3 | IF (temperature=Warm) AND (target=Warm) THEN | No_Change |

Step 4 − Obtain fuzzy value

Fuzzy set operations perform evaluation of rules. The operations used for OR and AND are Max and Min respectively. Combine all results of evaluation to form a final result. This result is a fuzzy value.

Step 5 − Perform defuzzification

Defuzzification is then performed according to the membership function for the output variable.
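A minimal Python sketch of Steps 4 and 5 for the three rules in the table, with AND as `min` and OR as `max`. Assumptions for illustration: triangular membership functions for room temperature in °C, a crisp target that is fully Warm, and defuzzification by a weighted average of hypothetical output values (Heat = +1, No_Change = 0, Cool = -1) instead of a full centroid computation.

```python
from typing import Dict


def triangle(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership with peak b (a == b or b == c gives a shoulder)."""
    if x == b:
        return 1.0
    if x < a or x > c:
        return 0.0
    return (x - a) / (b - a) if x < b else (c - x) / (c - b)


# Hypothetical membership functions for room temperature in °C.
TEMP = {
    "Very_Cold": (0, 0, 10), "Cold": (5, 12, 19), "Warm": (15, 21, 27),
    "Hot": (23, 30, 37), "Very_Hot": (33, 40, 40),
}
# Hypothetical crisp output for each action: +1 = full heating, -1 = full cooling.
ACTION_VALUE = {"Heat": 1.0, "No_Change": 0.0, "Cool": -1.0}


def control(room_temp: float, target_is_warm: float = 1.0) -> float:
    t = {term: triangle(room_temp, *abc) for term, abc in TEMP.items()}  # fuzzification
    strength: Dict[str, float] = {                                        # rule evaluation
        # Rule 1: IF temperature=(Cold OR Very_Cold) AND target=Warm THEN Heat
        "Heat": min(max(t["Cold"], t["Very_Cold"]), target_is_warm),
        # Rule 2: IF temperature=(Hot OR Very_Hot) AND target=Warm THEN Cool
        "Cool": min(max(t["Hot"], t["Very_Hot"]), target_is_warm),
        # Rule 3: IF temperature=Warm AND target=Warm THEN No_Change
        "No_Change": min(t["Warm"], target_is_warm),
    }
    total = sum(strength.values())
    if total == 0:
        return 0.0  # no rule fired
    # Defuzzification: weighted average of the output values (a simplification of centroid).
    return sum(strength[a] * ACTION_VALUE[a] for a in strength) / total


print(round(control(12), 2))  # 1.0   (cold room: heat)
print(round(control(21), 2))  # 0.0   (warm room: no change)
print(round(control(25), 2))  # -0.46 (partly Warm, partly Hot: mild cooling)
```

At 25 °C the room is partly Warm and partly Hot, so rules 2 and 3 both fire and their outputs are blended into one crisp value.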

Application Areas of Fuzzy Logic

The key application areas of fuzzy logic are as given −

Automotive Systems

- Automatic Gearboxes

- Four-Wheel Steering

- Vehicle environment control

Consumer Electronic Goods

- Hi-Fi Systems

- Photocopiers

- Still and Video Cameras

- Television

Domestic Goods

- Microwave Ovens

- Refrigerators

- Toasters

- Vacuum Cleaners

- Washing Machines

Environment Control

- Air Conditioners/Dryers/Heaters

- Humidifiers

Advantages of FLSs

- Mathematical concepts within fuzzy reasoning are very simple.

- You can modify an FLS by just adding or deleting rules, thanks to the flexibility of fuzzy logic.

- Fuzzy logic systems can take imprecise, distorted, noisy input information.

- FLSs are easy to construct and understand.

- Fuzzy logic can be applied to complex problems in many fields, including medicine, because it resembles human reasoning and decision making.

Disadvantages of FLSs

- There is no systematic approach to fuzzy system designing.

- They are understandable only when simple.

- They are suitable for the problems which do not need high accuracy.

ML | What is Machine Learning?

Arthur Samuel, a pioneer in the field of artificial intelligence and computer gaming, coined the term “Machine Learning”. He defined machine learning as – “Field of study that gives computers the capability to learn without being explicitly programmed”.

In layman's terms, Machine Learning (ML) can be explained as automating and improving the learning process of computers based on their experience, without their being explicitly programmed for each task. The process starts with feeding in good-quality data and then training our machines (computers) by building machine learning models using the data and different algorithms. The choice of algorithm depends on what type of data we have and what kind of task we are trying to automate.

Example: training of students for exams.

While preparing for exams, students don't just cram the subject; they try to learn it with complete understanding. Before the examination, they feed their machine (brain) with a good amount of high-quality data (questions and answers from different books, teachers' notes, or online video lectures). In effect, they are training their brain with input as well as output, i.e., what kind of approach or logic they need to solve different kinds of questions. Each time they solve a practice test paper, they measure their performance (accuracy/score) by comparing their answers with the answer key. Gradually, their performance keeps increasing, and they gain more confidence in the approach they have adopted. That's how models are actually built: train the machine with data (both inputs and outputs are given to the model), then, when the time comes, test it on data with inputs only, and obtain the model's score by comparing its answers with the actual outputs, which were not fed in during training. Researchers are working with assiduous efforts to improve algorithms and techniques so that these models perform even better.

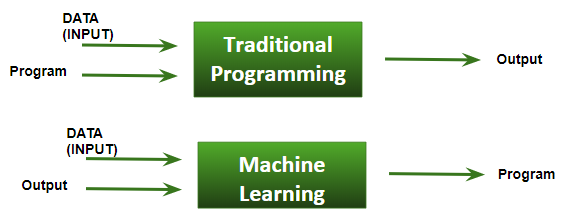


Basic Difference in ML and Traditional Programming?

- Traditional Programming: We feed in DATA (Input) + PROGRAM (logic), run it on the machine, and get the output.

- Machine Learning : We feed in DATA(Input) + Output, run it on machine during training and the machine creates its own program(logic), which can be evaluated while testing.
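The difference can be shown on a toy task. In this hypothetical Python sketch, traditional programming means writing the Celsius-to-Fahrenheit rule by hand, while the ML version is given only input/output pairs and recovers the rule with ordinary least squares, one of the simplest learning algorithms.

```python
from typing import List, Tuple


# Traditional programming: a human writes the rule (the PROGRAM) explicitly.
def fahrenheit_rule(celsius: float) -> float:
    return 1.8 * celsius + 32


# Machine learning: we supply inputs AND outputs; the "program" (w, b) is fitted from them.
def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y ≈ w * x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    w = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return w, my - w * mx


celsius = [-10.0, 0.0, 10.0, 20.0, 30.0]
fahrenheit = [fahrenheit_rule(c) for c in celsius]   # training outputs (labels)
w, b = fit_line(celsius, fahrenheit)
print(round(w, 3), round(b, 3))  # 1.8 32.0
print(round(w * 25 + b, 1))      # 77.0 (prediction for an input not seen in training)
```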

What exactly does learning mean for a computer?

A computer is said to be learning from experience with respect to some class of tasks if its performance in a given task improves with experience. Formally, a computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.

Example: playing checkers.

E = the experience of playing many games of checkers

T = the task of playing checkers.

P = the probability that the program will win the next game

In general, any machine learning problem can be assigned to one of two broad classifications: supervised learning and unsupervised learning.

How things work in reality:

- Talking about online shopping, there are millions of users with an unlimited range of interests with respect to brands, colors, price range and more. While shopping online, buyers tend to search for a number of products. Searching for a product frequently will make the buyer's Facebook feed, web pages, search engine, or that online store start recommending or showing offers on that particular product. Nobody sits there coding such a task for each and every user; all of this is completely automatic. Here, ML plays its role. Researchers, data scientists, and machine learning engineers build models using large amounts of good-quality data, and the machine then performs automatically and even improves with more experience and time.

Traditionally, advertising was done only through newspapers, magazines and radio, but technology now makes targeted advertising (online ad systems) possible, which is a far more efficient way to reach the most receptive audience.

- Even in health care, ML is doing a fabulous job. Researchers and scientists have trained models to detect cancer just by looking at slide (cell) images. For humans, this task would take a lot of time. Now, without that delay, machines predict the chances of having or not having cancer with some accuracy, and doctors only have to give a confirming call. How is this possible? All that is required is a high-computation machine, a large amount of good-quality image data, and an ML model with good algorithms to achieve state-of-the-art results.

Doctors are even using ML to diagnose patients based on the different parameters under consideration.

- You might have used IMDB ratings; Google Photos, which recognizes faces; Google Lens, where an ML image-text recognition model extracts text from the images you feed in; or Gmail, which categorizes e-mail as social, promotions, updates, or forums using text classification, which is a part of ML.

How does ML work?

- Gathering past data in the form of text files, Excel files, images, or audio data. The better the quality of the data, the better the model learns.

- Data Processing – Sometimes, the data collected is in the raw form and it needs to be rectified.

Example: if the data has some missing values, they have to be filled in or otherwise handled. If the data is in the form of text or images, it has to be converted to numerical form, be it a list, array, or matrix. Simply put, the data has to be made relevant and understandable by the machine.

- Building models with suitable algorithms and techniques and then training them.

- Testing the prepared model on data that was not fed in at training time, and so evaluating its performance (score, accuracy).
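The four steps can be sketched end to end in plain Python. Everything here is a hypothetical toy: the data set, mean imputation for the missing values, a 1-nearest-neighbour classifier as the model, and a fixed train/test split.

```python
from typing import List, Optional, Tuple

Row = Tuple[List[Optional[float]], int]

# Gathering data: hypothetical (hours_studied, hours_slept) -> passed (1) / failed (0).
# None marks a missing value.
raw: List[Row] = [
    ([8.0, 7.0], 1), ([1.0, 4.0], 0), ([6.0, None], 1), ([2.0, 5.0], 0),
    ([7.0, 8.0], 1), ([None, 3.0], 0), ([9.0, 6.0], 1), ([1.5, 6.0], 0),
]


def impute_mean(rows: List[Row]) -> List[Tuple[List[float], int]]:
    """Data processing: replace each missing value with the mean of its column."""
    n_cols = len(rows[0][0])
    means = []
    for j in range(n_cols):
        present = [x[j] for x, _ in rows if x[j] is not None]
        means.append(sum(present) / len(present))
    return [([means[j] if x[j] is None else x[j] for j in range(n_cols)], y) for x, y in rows]


def predict_1nn(train: List[Tuple[List[float], int]], x: List[float]) -> int:
    """Model: 1-nearest neighbour, i.e. copy the label of the closest training example."""
    _, label = min(train, key=lambda row: sum((a - b) ** 2 for a, b in zip(row[0], x)))
    return label


data = impute_mean(raw)
train, test = data[:6], data[6:]   # hold out the last two rows for testing
correct = sum(predict_1nn(train, x) == y for x, y in test)
print(f"test accuracy = {correct / len(test):.2f}")  # test accuracy = 1.00
```

For simplicity the column means are computed over all rows; in practice they should be computed on the training split only, so that no information from the test data leaks into training.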

Pre-requisites to learn ML:

- Linear Algebra

- Statistics and Probability

- Calculus

- Graph theory
